About me

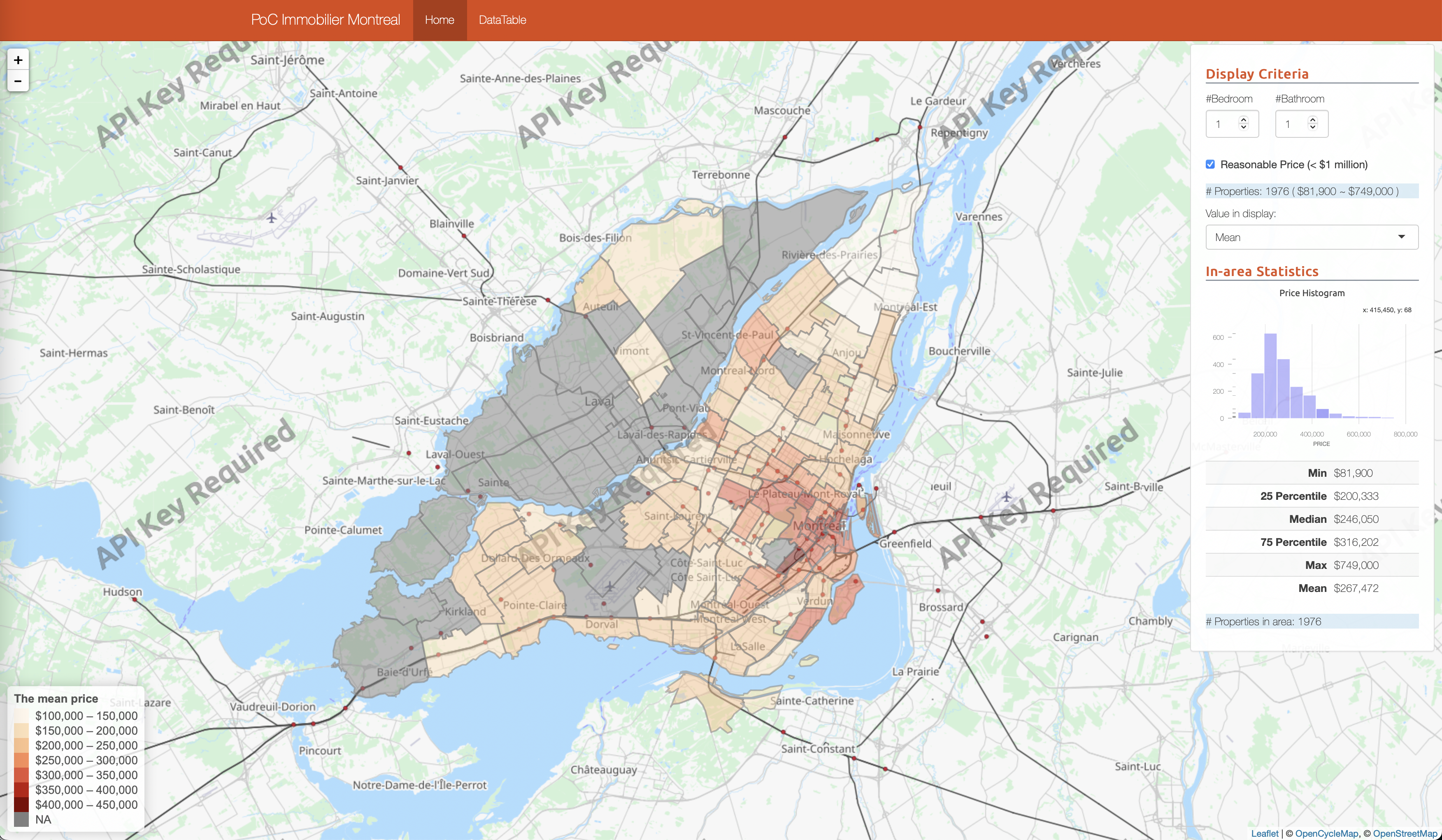

I am currently working in Montreal, Canada as a principal data scientist.

I firmly believe data science is about providing data-driven solutions by converting business questions into mathematical problems and applying state-of-the-art and innovative methods.

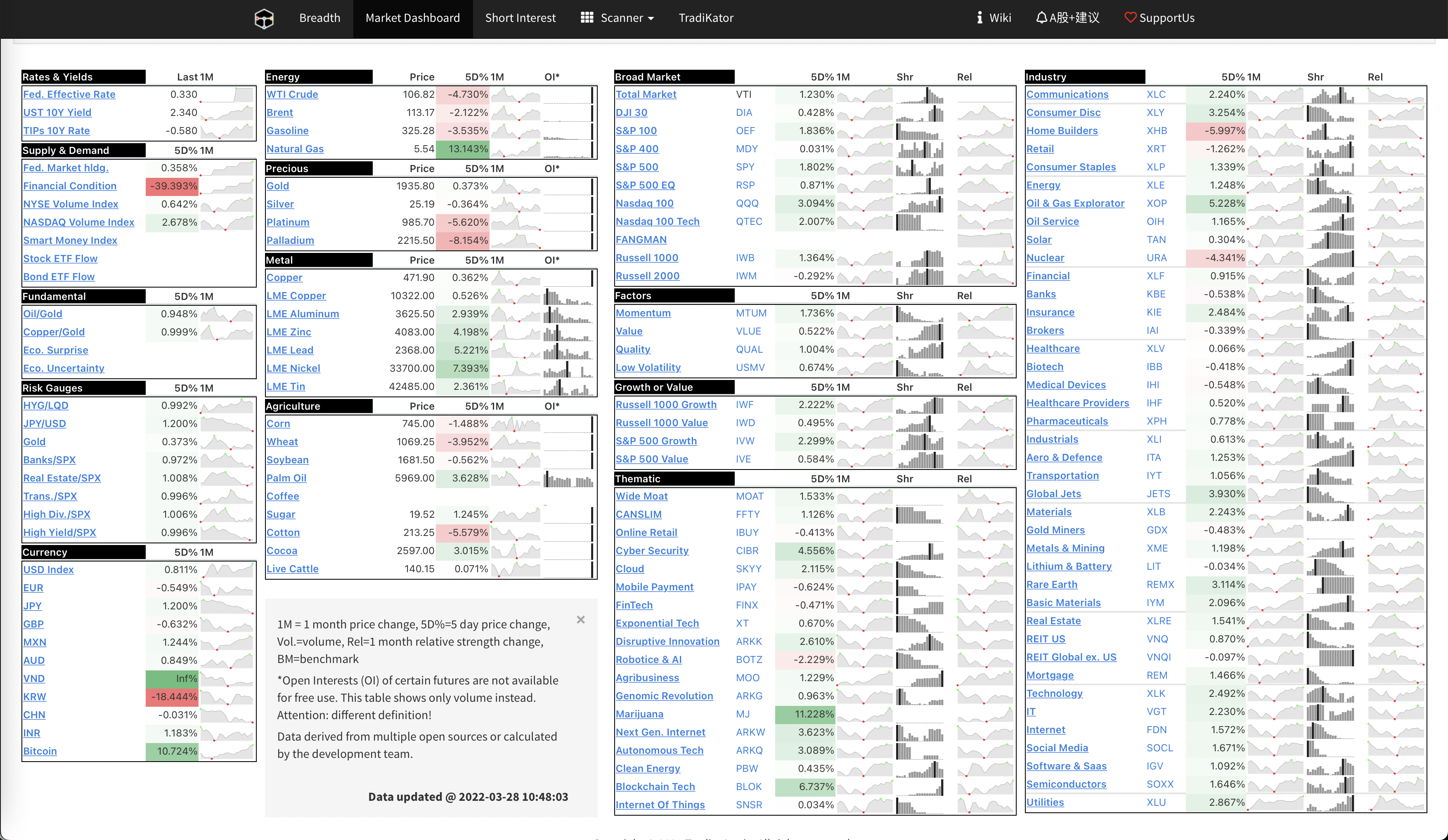

Life after work: Investment, Photography, Polaroid, Astronomy & Geography

CV Memo